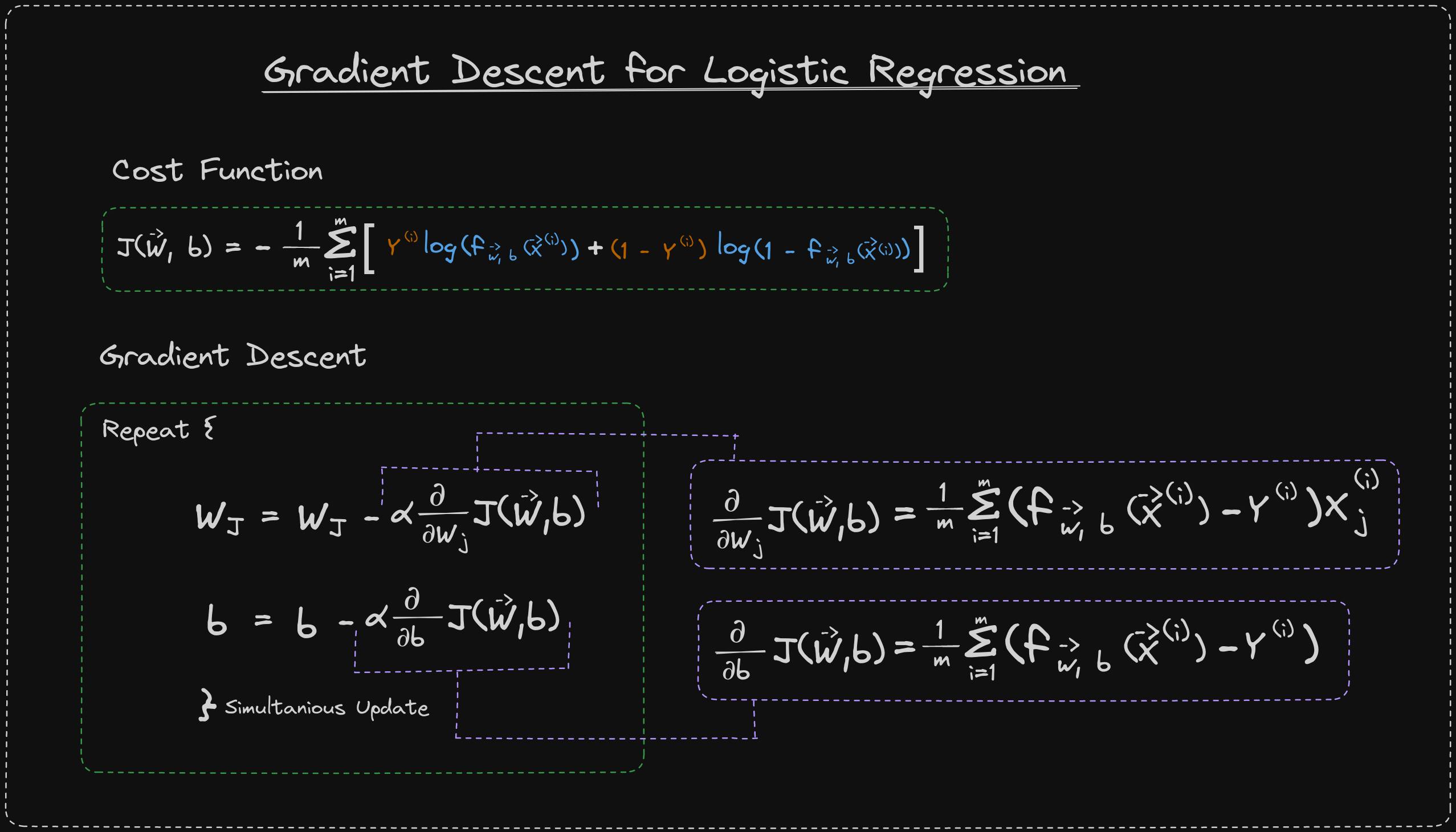

To train a logistic regression model, we need to determine the values of the parameters w and b that minimize the cost function J of w and b. To accomplish this, we'll use gradient descent. In this video, we'll focus on how to choose good values for w and b. Once we've done this, we can input new data, such as a patient's tumor size and age, and get a diagnosis. The model can predict the label y or estimate the probability that y is one.

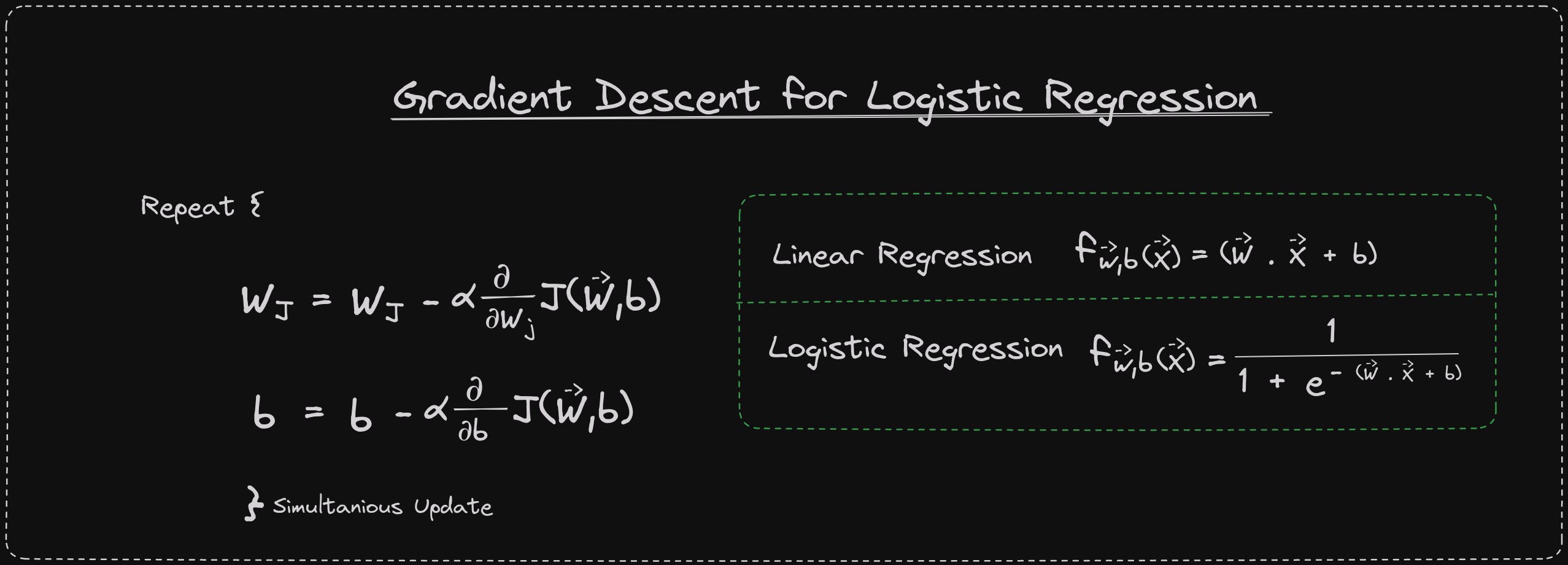

To minimize the cost function, you can use gradient descent algorithm. If you want to reduce the cost j, as a function of w and b, you can use this algorithm. In this algorithm, you repeatedly update each parameter as the 0 value minus Alpha, which is the learning rate times this derivative term.

Let's take a look at the derivative of j with respect to w_j. This term up on top here, where j goes from one through n, where n is the number of features. If you apply the rules of calculus, you can show that the derivative of the cost function capital J with respect to w_j is equal to this expression over here: 1 over m times the sum from 1 through m of this error term, where f minus the label y times x_j. Here, x_i_j is the j feature of training example i.

Now, let's also look at the derivative of j with respect to the parameter b. It turns out to be this expression over here, which is quite similar to the expression above, except that it is not multiplied by this x superscript i subscript j at the end.

To carry out these updates, you can use simultaneous updates. This means that you first compute the right-hand side for all of these updates and then simultaneously overwrite all the values on the left at the same time.

If you plug these derivative expressions into these terms here, it will give you gradient descent for logistic regression.

Logistic regression and linear regression are often confused because both equations look similar. However, the difference between the two lies in the function f of x. In linear regression, f of x is defined as wx plus b, whereas in logistic regression, it is defined as the sigmoid function applied to wx plus b. Although the two algorithms look the same, they are different because of the definition of f of x.

Gradient descent can be used to make sure logistic regression converges. You can monitor the gradient descent to ensure it's working properly. The updates for the parameters w_j can be written as if you're updating one parameter at a time. To make gradient descent run faster for logistic regression, vectorization can be used, similar to the discussion on vectorized implementations of linear regression. The details of the vectorized implementation can be found in the optional labs.

Feature scaling is important to take into account when using logistic regression. Scaling all the features to take on similar ranges of values can help gradient descent converge faster. The same approach can be applied to logistic regression.

Optional Lab for Gradient Descent and Logistic Regression

🎙️ Message for Next Episode:

In the upcoming episode, we will shift our focus to the practical application of logistic regression. We will cover topics such as data preparation, model building, feature engineering, and interpretation. We aim to apply logistic regression in real-world scenarios and put our knowledge into practice.

Resources For Further Research

Coursera - Machine Learning Specialization

This Article is heavily inspired by this Course so I will also recommend you to check this course out, there is an option to watch the

course for freeor you can buy the course to gain aSpecialization Certificate.Optional labs provided in this article are from this course itself.

"Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow" by Aurélien Géron:

- A practical guide covering various machine learning concepts and implementations.

By the way…

Call to action

Hi, Everydaycodings— I’m building a newsletter that covers deep topics in the space of engineering. If that sounds interesting, subscribe and don’t miss anything. If you have some thoughts you’d like to share or a topic suggestion, reach out to me via LinkedIn or X(Twitter).

References

And if you’re interested in diving deeper into these concepts, here are some great starting points:

Kaggle Stories - Each episode of Kaggle Stories takes you on a journey behind the scenes of a Kaggle notebook project, breaking down tech stuff into simple stories.

Machine Learning - This series covers ML fundamentals & techniques to apply ML to solve real-world problems using Python & real datasets while highlighting best practices & limits.